Colleagues,

Those of us living in the Ottawa area got seriously ripped off on Tuesday. It was cloudy, and we missed the best light show in a decade.

Late on Sunday 22 January, the Sun erupted with an M8.7-class solar flare. The eruption launched a coronal mass ejection (CME), a huge cloud of magnetized plasma, which left the Sun at about 2,000 km per second and struck the Earth a little after 1000 hrs EST on Tuesday. The eruption also produced a burst of highly energetic protons, and the Space Weather Prediction Center at the US National Oceanic and Atmospheric Administration rated the resulting solar radiation storm as an S3 (the scale goes up to S5), making it the strongest such event since 2005, possibly 2003. The geomagnetic storm that followed the CME's arrival was rated G1 (minor).

Thanks to our atmosphere, solar storms can't harm humans on the ground, but when charged particles hit the Earth's magnetosphere, they can play hob with radio communications, and at high energy levels they can damage satellites. They also send bursts of charged particles into the upper atmosphere, leading to tremendous auroral displays. NASA had predicted that the Aurora Borealis could be visible as far south as Maine. The following image was taken in Sweden:

See what I mean? Awesome.

Elsewhere, the aurora was reported to have looked like a rainbow in the night sky. Too bad we missed it here.

Who cares, though, right? I mean, it's pretty, sure, but where's the relevance to what we do? Well, this kind of solar activity is strategically very relevant, in a couple of ways.

First, powerful geomagnetic storms can impact humans - not through ionizing radiation, but through their immediate electromagnetic effects. Geomagnetic perturbations can induce powerful currents on Earth. Here's a plot from a Swedish lab showing the arrival of the CME:

Massive geomagnetic events can wreak havoc with power grids because, under Faraday's Law, a time-varying magnetic field induces a voltage, and therefore a current, in a conductor - and the surface of the world is positively covered with conductors. A strong enough magnetic perturbation can drive enough current through high-tension lines to overload switches and trip circuit breakers. Take a look at the magnetometer declination on that chart; last Tuesday's event produced a perturbation about 8 times the average maximum perturbation recorded over the hours preceding the event. Frankly, the effects shouldn't be surprising; we're talking about billions of tonnes of charged particles hitting the planet at millions of kilometres per hour. The kinetic energy alone of such an impact is staggering. It's enough to bend the planet's magnetosphere out of shape.
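To put a rough number on "staggering", the sketch below applies the kinetic energy formula E = ½mv² to a CME. The 10^12 kg mass (about a billion tonnes) is an assumed round figure for a large CME, not a measured value for Tuesday's event; the speed is the ~2,000 km per second quoted above. The result is the energy of the whole ejection; the Earth intercepts only part of it.

```python
# Back-of-envelope kinetic energy of a coronal mass ejection (CME).
# ASSUMPTION: the 1e12 kg mass (about a billion tonnes) is a round
# illustrative figure for a large CME, not a measurement of this event.

TNT_MEGATONNE_J = 4.184e15  # energy of one megatonne of TNT, in joules


def kinetic_energy_joules(mass_kg: float, speed_km_s: float) -> float:
    """Return (1/2) * m * v**2 in joules, with the speed given in km/s."""
    speed_m_s = speed_km_s * 1_000.0
    return 0.5 * mass_kg * speed_m_s ** 2


CME_MASS_KG = 1e12        # assumed, illustrative
CME_SPEED_KM_S = 2_000.0  # speed quoted in the text

energy = kinetic_energy_joules(CME_MASS_KG, CME_SPEED_KM_S)
megatonnes = energy / TNT_MEGATONNE_J  # express in megatonnes of TNT for scale
print(f"Kinetic energy: {energy:.1e} J (about {megatonnes:.1e} Mt of TNT)")
```

With these inputs the sketch gives roughly 2 × 10^24 J; a heavier or faster CME scales the figure linearly with mass and with the square of speed.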

There weren't any reports of power grid failures on Tuesday, but remember, this was only a G1 storm. A CME that hit the Earth on 13 March 1989 knocked out Quebec's power grid for nine hours. The same storm temporarily disabled a number of Earth-orbiting satellites, and folks in Texas were able to see the northern lights. Another big geomagnetic storm in August of that same year crippled microchips and shut down the Toronto Stock Exchange.
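Faraday's Law explains why long transmission lines are the weak point. A common simplified way to estimate the effect treats the storm as inducing a roughly uniform electric field in the ground, measured in volts per kilometre; a line grounded at both ends then sees a driving voltage of about field × length, and the resulting quasi-DC current is that voltage divided by the resistance of the whole loop. The sketch below follows that simplification; all three inputs are hypothetical illustration values, not figures from the 1989 Quebec event.

```python
# Simplified estimate of a geomagnetically induced current (GIC) in one
# high-voltage line grounded at both ends. The changing geomagnetic field
# induces a roughly uniform geoelectric field E at the surface; the line
# sees a driving voltage of about E * L, which pushes a quasi-DC current
# through the transformers and grounding at each substation.
# ASSUMPTION: all numbers below are hypothetical, chosen for illustration.


def induced_voltage(e_field_v_per_km: float, line_length_km: float) -> float:
    """Driving voltage: field component along the line times line length."""
    return e_field_v_per_km * line_length_km


def gic_amps(voltage_v: float, loop_resistance_ohm: float) -> float:
    """Ohm's law for the whole loop (line + transformers + grounding)."""
    return voltage_v / loop_resistance_ohm


E_FIELD = 1.0        # V/km, hypothetical storm-time geoelectric field
LINE_LENGTH = 500.0  # km, hypothetical line length
LOOP_R = 5.0         # ohms, hypothetical total loop resistance

voltage = induced_voltage(E_FIELD, LINE_LENGTH)
current_a = gic_amps(voltage, LOOP_R)
print(f"Driving voltage: {voltage:.0f} V, quasi-DC current: {current_a:.0f} A")
```

Because power transformers are built for alternating current, a sustained DC offset of this order can push their cores toward saturation, which can overheat them and trip protective equipment.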

Those were bad, but the Carrington Event was something else entirely. On 1 September 1859, British astronomers Richard Carrington and Richard Hodgson independently observed a solar superflare on the surface of the Sun. The resulting CME took only about 18 hours to reach the Earth, implying an average speed of ca. 2,300 km per second, somewhat faster than last Tuesday's bump.
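The 2,300 km per second figure follows directly from the 18-hour transit time. A minimal check, assuming the CME covered one astronomical unit (the mean Sun-Earth distance) and ignoring any deceleration along the way, so the result is an average rather than a launch speed:

```python
# Average Sun-Earth transit speed of a CME, from its travel time.
AU_KM = 149_597_870.7  # one astronomical unit, in km


def average_speed_km_s(transit_hours: float, distance_km: float = AU_KM) -> float:
    """Mean speed over the given distance for a given transit time."""
    return distance_km / (transit_hours * 3_600.0)


carrington = average_speed_km_s(18.0)
print(f"Carrington CME average speed: {carrington:,.0f} km/s")
```

The same function run in reverse order of reasoning (a faster or slower transit) shows how sensitive the speed estimate is to the reported arrival time.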

When the CME hit, it caused the largest geomagnetic storm in recorded history. According to contemporary accounts, people in New York City were able to read the newspaper at night by the light of the Aurora Borealis, and the glow woke gold miners labouring in the Rocky Mountains. The more immediate impact of the Carrington Event, though, was that the intense perturbations of the Earth's magnetic field induced enormous currents in the telegraph wires that had only recently been installed in Europe and across North America. Telegraph systems failed all over both continents; sparks reportedly flew from the telegraph towers, telegraph operators received painful shocks, and telegraph paper caught fire.

Geomagnetic storms like the Carrington Event are powerful enough to leave traces in the terrestrial record. Ice core samples can contain layers of nitrates that show evidence of high-energy proton bombardment. Based on such samples, it's estimated that massive solar eruptions like the Carrington Event occur, on average, every five hundred years or so. Electromagnetic catastrophists (I've derided them before as "the Pulser Crowd") point to the Carrington Event, and to lesser impacts like the 1989 solar storm, as examples of the sort of thing that could "bring down" Western civilization by crippling power grids. They also tend to posit that an attacker could accomplish the same thing deliberately, via an electromagnetic pulse (EMP) from the high-altitude detonation of a high-yield thermonuclear weapon over the continental US - as if the US strategic weapons system weren't the one thing in the entire country that was hardened against EMP from top to bottom. It hasn't happened yet, and the protestations of the Pulsers notwithstanding, the grid is a lot more robust than the old telegraph lines used to be, if only because we understand electromagnetism a lot better now than they did back in the days of beaver hats, mercury nostrums, and stock collars.
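A recurrence estimate like "every five hundred years or so" is an average, not a schedule. One common way to turn it into odds over a planning window is to assume such events arrive independently at a constant average rate (a Poisson process); that modelling choice is an assumption here, not something the ice-core estimates themselves establish.

```python
import math


def prob_at_least_one(window_years: float, mean_recurrence_years: float) -> float:
    """P(at least one event in the window), assuming a Poisson process
    whose mean time between events is mean_recurrence_years."""
    rate_per_year = 1.0 / mean_recurrence_years
    return 1.0 - math.exp(-rate_per_year * window_years)


for window in (10, 50, 100):
    p = prob_at_least_one(window, 500.0)
    print(f"{window:>3} years: {p:.1%}")
```

Under that assumption, a 500-year average recurrence works out to roughly a 2% chance per decade and about 18% per century.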

That said, this sort of thing does tend to make one look at our ever-benevolent star in a new light (no pun intended). The second reason this sort of thing is strategically relevant is that recent studies and observed data seem to be confirming some disturbing solar activity trends identified a few years ago - and the potential consequences aren't pleasant.

As I've mentioned before, one of the perplexing claims in the IPCC's list of assumptions upon which all of the general circulation ("climate") models are built is that the aggregate impact of "natural forcings" (a term that the IPCC uses to lump together both volcanic aerosols and all solar forcings) is negative - i.e., that the net effect of volcanic and solar activity is to cool the Earth, rather than warm it. The IPCC also argues that these natural forcings “are both very small compared to the differences in radiative forcing estimated to have resulted from human activities.” [Note A] The assumption that solar activity is insufficient to overcome the periodic cooling impact of large volcanic eruptions (which, let's face it, aren't all that common - the last one to have a measurable impact on global temperatures was the eruption of Mt. Pinatubo on 15 June 1991) is, to say the least, "unproven."

The IPCC has also dismissed the Svensmark hypothesis (the argument that the warming effect of seemingly minor increases in solar activity is magnified by the accompanying increase in the solar wind, which shields the Earth from galactic cosmic rays and prevents them from nucleating low-level clouds, thereby reducing Earth's albedo, and vice versa - you'll recall this from previous COPs/TPIs), which, unlike the hypothesis that human CO2 emissions are the Earth's thermostat, is actually supported by empirical evidence.

This is probably why the IPCC's climate models have utterly failed. Temperature trends are below the lowest IPCC estimates for temperature response to CO2 emissions - below, in fact, the temperature response that NASA's rogue climatologist, James Hansen, predicted would occur even if greenhouse gas concentrations stopped rising after 2000.

(Dotted and solid black lines - predicted temperature trends according to Hansen's emissions scenarios. Blue dots - measured temperatures. Red line - smoothed measured temperature trend.)

The lowest of the black dotted lines is what Hansen predicted Earth's temperature change would be if greenhouse gas concentrations were frozen at 2000 levels. Obviously, that hasn't happened; in fact, global CO2 concentrations have increased by 6.25% since then. Temperatures haven't increased at all. In other words, while CO2 emissions have continued to skyrocket and atmospheric CO2 concentrations have continued to increase, actual measured temperatures have levelled off, and the trend is declining. Don't take my word for it; the data speak for themselves.

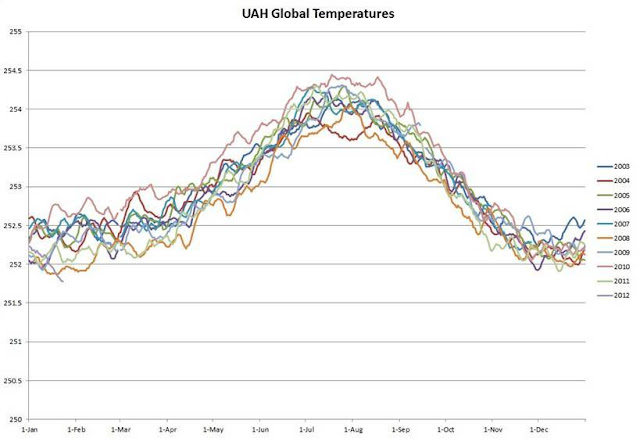

I particularly like the next graph, which shows the last 10 years of average global temperature trends as measured by the most reliable instruments available to mankind:

The satellite temperature measurement data are maintained by the University of Alabama in Huntsville, and the short puce line at the left of the graph shows 2012 temperatures to date. That's right - according to satellite measurements, this is the coldest winter in at least a decade. Have you heard anything about that from the mainstream media? Have you heard anything about it from NASA? Probably not; Hansen just released another statement shrieking that 2011 was the "11th-warmest" year on record. This was after modifying the GISS temperature dataset - again - to make the past colder, and the present hotter. Seriously, where apart from climate science is it considered acceptable to change the past to conform to your theories about the future? Well, apart from communist dictatorships, I mean.

Why aren't the data following the GCM predictions? Well, let's look back a few years. In a paper I wrote back then, I examined what solar activity trends suggested about the likelihood of continued, uninterrupted global warming. Here's an excerpt. Bear with me here, and please excuse the dated charts; the paper, after all, was written in January 2009 and updated in April 2009, using sources and data available to that point:

Solar physicists have begun to speculate that the observed, and extremely slow, start to solar cycle 24 may portend an unusually long, weak solar cycle. According to NASA, in 2008 the Sun experienced its “blankest year of the space age” – 266 spotless days out of 366, or 73%, a low not seen since 1913. David Hathaway, a solar physicist at NASA’s Marshall Space Flight Center, noted that sunspot counts were at a 50-year low, meaning that “we’re experiencing a deep minimum of the solar cycle.” At the time of writing, the figure for 2009 was 78 spotless days out of 90, or 87%, and the Goddard Space Flight Center was calling it a “very deep solar minimum” – “the quietest Sun we’ve seen in almost a century.”

This very low solar activity corresponds with “a 50-year record low in solar wind pressure” discovered by the Ulysses spacecraft. The fact that we are simultaneously experiencing both extremely low solar wind pressure and sustained global cooling, incidentally, may be considered prima facie circumstantial corroboration of Svensmark’s cosmic-ray cloud nucleation thesis.

Figure 17 - Solar cycle lengths, 1750-2007, and the two longest cycles of the past 300 years

Measured between minima, the average length of a solar cycle is almost exactly 11 years. The length of the current solar cycle (Solar Cycle 23, the mathematical minimum for which occurred in May 1996) was, as of 1 April 2009, a little over 12.9 years. This is already well over the mean, and at the time of writing, the minimum was continuing to deepen, with no indication that the next cycle had begun. Only one solar cycle in the past three centuries has exceeded that length – solar cycle 4, which lasted 13.66 years, from 1784 to 1798 (see figure 17). This was the last cycle before the Dalton Minimum, a period of lower-than-average global temperatures that lasted from approximately 1790 to 1830. The Dalton Minimum was the last prolonged “cold spell” of the Little Ice Age, from which temperatures have since been recovering (and which, as noted above, the IPCC and the proponents of the AGW thesis invariably take as the start-point for their temperature graphs, in a clear demonstration of the end-point fallacy in statistical methodology). On the basis of observations of past solar activity, some solar physicists are predicting that the coming solar cycle is likely to be weaker than normal, and could result in a period of cooling similar to the Dalton Minimum.
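The 12.9-year figure in the excerpt can be checked with ordinary date arithmetic. A minimal sketch; the text gives the cycle 23 minimum only as "May 1996", so the first of that month is an assumed stand-in, and a mean year of 365.25 days is used:

```python
from datetime import date


def years_between(start: date, end: date) -> float:
    """Elapsed time in years, using a mean year of 365.25 days."""
    return (end - start).days / 365.25


# ASSUMPTION: the text says only "May 1996"; the 1st is used for illustration.
CYCLE_23_START = date(1996, 5, 1)
AS_OF = date(2009, 4, 1)
MEAN_CYCLE_YEARS = 11.0
CYCLE_4_YEARS = 13.66  # longest cycle cited in the excerpt

length = years_between(CYCLE_23_START, AS_OF)
print(f"Cycle 23 length as of {AS_OF}: {length:.2f} years")
print(f"Excess over the 11-year mean: {length - MEAN_CYCLE_YEARS:.2f} years")
print("Already longer than cycle 4?", length > CYCLE_4_YEARS)
```

Shifting the assumed start day within May 1996 moves the result by at most about 0.08 years, so the "a little over 12.9 years" reading is sensitive to the exact minimum date.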

If we were to experience a similar solar minimum today – which is not unlikely, given that, as noted above, we are emerging from an 80+-year Solar Grand Maximum, during which the Sun was more active than at any time in the past 11,000 years – the net result could be a global temperature decline on the order of 1.5 degrees over the space of two solar cycles, i.e. a little over two decades. According to Archibald, during the Dalton Minimum, temperatures in central England dropped by more than a degree over a 20-year period, for a cooling rate of more than 5°C per century, while one location in Germany – Oberlach – recorded a decline of 2°C during the same period (a cooling rate of 10°C per century). Archibald predicts a decline of 1.5°C over the course of two solar cycles (roughly 22 years), for a cooling rate of 6.8°C per century. This would be cooling at a rate more than ten times faster than the warming that has been observed since the mid-1800s. “At this rate,” Monckton notes wryly, “by mid-century, we shall be roasting in a new ice age.”
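Each per-century rate in the excerpt is just the temperature change divided by the elapsed years, scaled to 100 years. The sketch below reproduces the three figures using only the changes and periods quoted above, treating "more than a degree" as exactly 1°C:

```python
def rate_per_century(change_c: float, years: float) -> float:
    """Convert a temperature change over some number of years to °C per century."""
    return change_c / years * 100.0


# (temperature change in °C, period in years), as quoted in the excerpt
cases = {
    "Central England, Dalton Minimum": (1.0, 20.0),
    "Oberlach, Dalton Minimum": (2.0, 20.0),
    "Archibald projection, two cycles": (1.5, 22.0),
}

for label, (change, years) in cases.items():
    print(f"{label}: {rate_per_century(change, years):.1f} °C per century")
```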

Well, since predictive analysis ought to be subject to review, what do things look like today, three years after that paper was written? Let's turn to NASA's David Hathaway, a solar physicist who continues to track and refine predictions for the depth and duration of the next solar cycle. Back in 2006, Hathaway, looking at the Sun's internal "conveyor belt", predicted that the next solar cycle - #24, the one we're currently in - would be stronger than cycle #23, and that #25 would be weaker.

(Source: NASA, David Hathaway, "Solar Cycle 25 peaking around 2022 could be one of the weakest in centuries", 10 May 2006 [Note B])

Hathaway's prediction was based on the conveyor belt model (look it up if you're interested). In 2010, two other researchers, Matthew Penn and William Livingston of the National Solar Observatory, developed a physical model based instead on the measured magnetic fields of sunspots. Based on their model, which seems to have greater predictive validity, they argued that the peak sunspot number for cycle 25 would not be merely low (75 or so), as Hathaway predicted, but exceptionally low, less than a tenth of that - a peak sunspot number of around 7, the lowest in observed history.

Independent of the normal solar cycle, a decrease in the sunspot magnetic field strength has been observed using the Zeeman-split 1564.8nm Fe I spectral line at the NSO Kitt Peak McMath-Pierce telescope. Corresponding changes in sunspot brightness and the strength of molecular absorption lines were also seen. This trend was seen to continue in observations of the first sunspots of the new solar Cycle 24, and extrapolating a linear fit to this trend would lead to only half the number of spots in Cycle 24 compared to Cycle 23, and imply virtually no sunspots in Cycle 25. [Note C]
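The extrapolation the abstract describes amounts to fitting a straight line to field-strength measurements and solving for when that line crosses a threshold, such as the 1500 Gauss level Penn and Livingston associate with the disappearance of sunspots. The sketch shows the mechanics only: the data are synthetic (a made-up, perfectly linear decline), not the authors' measurements, so the crossing year it prints reflects those invented numbers and nothing more.

```python
# Least-squares straight-line fit, then solve for when the fitted umbral
# field strength reaches a threshold.
# ASSUMPTION: the data points are SYNTHETIC (a made-up linear decline);
# they are not Penn and Livingston's measurements.


def linear_fit(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least squares; returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def year_reaching(threshold: float, slope: float, intercept: float) -> float:
    """Solve intercept + slope * year = threshold for year."""
    return (threshold - intercept) / slope


years = [1998.0 + k for k in range(11)]                # 1998..2008
gauss = [2700.0 - 60.0 * (y - 1998.0) for y in years]  # synthetic decline

slope, intercept = linear_fit(years, gauss)
crossing = year_reaching(1500.0, slope, intercept)
print(f"Fitted trend: {slope:.1f} G/year; reaches 1500 G around {crossing:.1f}")
```

The key assumption in any such projection is that the trend stays linear over the extrapolated period; real measurements scatter, so the fitted slope and crossing year carry uncertainty that this sketch does not compute.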

The authors predict that umbral magnetic field strength will drop below 1500 Gauss between 2017 and 2022. Below 1500 Gauss, no sunspots will appear. This is what the predictive chart from their paper looks like:

For the record, that's not a happy prediction. A maximum sunspot number of 7 is virtually unheard-of in the historical record. Using the Penn-Livingston model and NASA's SSN data, David Archibald, another solar expert, has projected sunspot activity over cycle 25...and this is what it looks like [Note D]:

First, note that measured data have already invalidated Hathaway's 2006 prediction about solar cycle 24; it is turning out to be considerably weaker than cycle 23. If Penn and Livingston are correct, however, cycle 25 could be less than one-fifth the strength of cycles 5 and 6, which in the first quarter of the 19th Century marked the depths of the Dalton Minimum. During this period, as noted above, temperatures plummeted, leading to widespread crop failures, famine, disease, and the delightful sociological and meteorological conditions that entertained Napoleon during the retreat from Moscow, and that Charles Dickens spent most of his career writing about. An SSN of less than 10, in fact, would be considerably lower than the Dalton Minimum; it would put the world into conditions not seen since the Maunder Minimum in the 17th Century, which was even worse.

How significant could this be? Well, look at the above chart. The last time there was an appreciable dip in solar activity - cycle 20 (October 1964 - June 1976) - the smoothed SSN curve peaked at 110, more than 10 times as strong as cycle 25 is expected to be...and the entire world went through a "global cooling" scare. In 1975, Newsweek published an article entitled “The Cooling World”, claiming, amongst other things, that “[t]he evidence in support of these predictions [of global cooling] has now begun to accumulate so massively that meteorologists are hard-pressed to keep up with it.” The article's author had some grim advice for politicians struggling to come to grips with the impending cooling: “The longer the planners delay, the more difficult will they find it to cope with climatic change once the results become grim reality.” [Note E]

Sound familiar?

That was 36 years ago. Now here we are in solar cycle 24, which is looking to be a good 20% weaker than solar cycle 20, the cycle that prompted all the cooling panic. Temperatures are once more measurably declining. Models of solar activity (models based on observed data, remember, not hypothetical projections of mathematically derived nonsense) project that the modern solar grand maximum that has driven Earth's climate for the last 80+ years is almost certainly over. This means that, based on historical experience, the coming solar cycles will probably be weak, and the Earth's climate is probably going to cool measurably. "Global warming" is done like dinner, and to anyone with the guts to look at the data and the brains to understand it, there is no correlation whatsoever between average global temperature and atmospheric carbon dioxide concentrations (let alone the tiny proportion of atmospheric CO2 resulting from human activities). The only questions left are (a) whether we are on our way to a Dalton-type Minimum, where temperatures drop a degree or two, or a much worse Maunder-type Minimum, where temperatures drop more than two degrees; and (b) whether we have the intelligence and common sense as a species to pull our heads out of our nether regions, look at the data, stop obsessing about things that are demonstrably not happening, and start preparing for the Big Chill.

Based on observed data, I'm guessing we're definitely in for colder weather - but I'm doubtful that we have the mental capacity to recognize that fact and do something about it, like, for example, developing the energy resources that we're going to need if we're going to survive a multidecadal cold spell.

Oh, and why is this relevant? Well, because we live in Canada. A new solar minimum - the Eddy Minimum, as some are beginning to call it - would severely impact Canada's agricultural capacity. As David Archibald pointed out in a lecture last year [Note F], Canada's grain-growing belt is currently at a geographic maximum, thanks to the present warm period (the shaded area in the image below). However, during the last cooling event (the one capped off by Solar Cycle 20, above), the grain belt shrank to the dotted line in the image.

A decline in average temperature of 1 degree - which would be consistent with a Dalton Minimum-type decline in temperatures - would shrink the grain belt to the solid black line; and a 2-degree drop in temperature would push that black line south, to the Canada-US border.

In other words, if we were to experience a drop in temperatures similar to that experienced during the Maunder Minimum - which, if Solar Cycle 25 is as weak as Penn and Livingston suggest it might be, is a possibility - then it might not be possible to grow grain in Canada.

This could be a problem for those of us who enjoy the simple things in life, like food. And an economy.

So what we should be asking ourselves is this: What's a bigger threat to Canada's national security?

· A projected temperature increase of 4 degrees C over the next century that, according to all observed data, simply isn't happening - but that, if it were, wouldn't improve our agricultural capacity one jot, because that shaded area is a soil-geomorphic limit, and sunshine doesn't turn muskeg or tundra into fertile earth no matter how warm it gets; [Note G]

or

· A decline in temperatures of 1-2 degrees C over the next few decades that, according to all observed and historical data, could very well be on the way - and which, if it happens, might make it impossible to grow wheat in the Great White North?

You decide. I'll be looking for farmland in Niagara.

Cheers,

//Don//

------------------------------------------

27 January 2012 – Update to ‘Sunshine’, etc.

Colleagues,

It figures that the same day I send out a TPI featuring a 6-month-old slide, the author of the slide would publish an update. [Note A] I thought I'd send it along, as the refinements to his arguments sort of emphasize why we should be taking a closer look at the question of what might really be happening to climate, and why.

David Archibald, who produced that grain-belt map I cited above, has refined his projection based on new high-resolution surface temperature studies from Norway and on new solar activity data, including data on the cyclical "rush to the poles" of polar-crown prominences, which has become considerably better understood over the past five years. Here's how one paper puts it:

“Cycle 24 began its migration at a rate 40% slower than the previous two solar cycles, thus indicating the possibility of a peculiar cycle. However, the onset of the ‘Rush to the Poles’ of polar crown prominences and their associated coronal emission, which has been a precursor to solar maximum in recent cycles (cf. Altrock 2003), has just been identified in the northern hemisphere. Peculiarly, this ‘rush’ is leisurely, at only 50% of the rate in the previous two cycles.”

So what? Well, it means that cycle 24 is likely to be a lot longer than normal. And what are the consequences of that, you ask?

If Solar Cycle 24 is progressing at 60% of the rate of the previous two cycles, which averaged ten years long, then it is likely to be 16.6 years long. This is supported by examining Altrock’s green corona diagram from mid-2011 above. In the previous three cycles, solar minimum occurred when the bounding line of major activity (blue) intersects 10° latitude (red). For Solar Cycle 24, that occurs in 2026, making it 17 years long.
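The 16.6-year figure is simple proportional scaling: if a cycle runs at 60% of the pace of cycles that averaged ten years, it takes 1/0.6 times as long. A minimal sketch using only the quoted inputs (a 10-year reference length and a 60% rate, i.e. the "40% slower" migration described in the paper quoted above):

```python
def projected_cycle_length(reference_length_years: float, relative_rate: float) -> float:
    """If a cycle progresses at relative_rate times the pace of a reference
    cycle, its length scales by the reciprocal of that rate."""
    return reference_length_years / relative_rate


length_24 = projected_cycle_length(10.0, 0.60)
print(f"Projected Solar Cycle 24 length: {length_24:.1f} years")
```

Exact arithmetic gives about 16.67 years, which the passage truncates to 16.6; the green-corona reading in the same passage gives 17.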

The first solar cycle of the Maunder Minimum was 18 years long. That's the last time the world saw solar cycles as long as the coming cycles are projected to be.

For humanity, that is going to be something quite significant, because it will make Solar Cycle 24 four years longer than Solar Cycle 23. With a temperature – solar cycle length relationship for the North-eastern US of 0.7°C per year of solar cycle length, temperatures over Solar Cycle 25 starting in 2026 will be 2.8°C colder than over Solar Cycle 24, which in turn is going to be 2.1°C colder than Solar Cycle 23.

The total temperature shift will be 4.9°C for the major agricultural belt that stretches from New England to the Rockies straddling the US – Canadian border. At the latitude of the US-Canadian border, a 1.0°C change in temperature shifts growing conditions 140 km – in this case, towards the Gulf of Mexico. The centre of the Corn Belt, now in Iowa, will move to Kansas.

Emphasis added.
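The quoted figures chain together by simple multiplication. The sketch below reproduces them, assuming the relationship works as the passage implies: each extra year of one cycle's length cools the following cycle by 0.7°C. The 4-year figure for Cycle 24 versus Cycle 23 is from the quote; the 3-year figure for Cycle 23 versus Cycle 22 is an assumption, back-calculated from the quoted 2.1°C (2.1 / 0.7 = 3). The final distance is just the 4.9°C total multiplied by the quoted 140 km per degree; the passage itself does not state it.

```python
# Arithmetic behind the quoted projection, using only its stated factors.
DEG_C_PER_YEAR_OF_CYCLE_LENGTH = 0.7  # quoted relationship, North-eastern US
KM_PER_DEG_C = 140.0                  # quoted shift at the US-Canada border

# Extra length of each cycle relative to the one before it, in years.
# The 4-year figure is quoted; the 3-year figure is ASSUMED, back-calculated
# from the quoted 2.1 °C (2.1 / 0.7 = 3).
extra_length = {"cycle 23 vs 22": 3.0, "cycle 24 vs 23": 4.0}

total_c = 0.0
for label, years in extra_length.items():
    cooling = years * DEG_C_PER_YEAR_OF_CYCLE_LENGTH
    total_c += cooling
    print(f"{label}: {years:.0f} years longer -> {cooling:.1f} °C cooler over the next cycle")

shift_km = total_c * KM_PER_DEG_C
print(f"Total: {total_c:.1f} °C, shifting growing conditions about {shift_km:.0f} km")
```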

Now remember, that's the centre of the Corn Belt. What about its northern fringes? Here's Archibald's updated grain belt map. Note the newly added last line:

"All over." That's 25-30 years from now - or, as some folks around this building like to call it, "Horizon Three". And here we are, still talking about melting sea ice, thawing permafrost, and building deep-water ports in the Arctic. Maybe we should be talking about building greenhouses in Lethbridge and teaching beaver trapping in high school.

Just something to think about the next time somebody starts rattling on about how the science is settled and global warming is now inevitable. We'd better hope they're right, because the alternative ain't pretty.

Cheers...I guess.

//Don//

Notes

----------------------------------------------------------

Notes (From original post)

A) IPCC, Fourth Assessment Report, Working Group I, Chapter 2, p. 137.

E) Peter Gwynne, “The Cooling World”, Newsweek, 28 April 1975, p. 64.

G) H/T to Neil for the "sunshine doesn't turn muskeg into black earth" line.

Based on current science, the date of the solar minimum that ends a cycle is usually determined from sunspot counts and is agreed upon by scientists after the fact, once the subsequent cycle is under way. However, in addition to very low smoothed sunspot numbers, solar minima are also marked by peaks in the flux of cosmic rays striking the Earth, as measured by ground-based neutron monitors (because the Sun’s magnetic field, which shields the Earth from cosmic rays, is weakest during the solar minimum; for more information on this point, see chapter 5). Because the neutron counts, at the time of writing, were still increasing, it is unlikely that the solar minimum separating solar cycles 23 and 24 had yet been reached. See Anthony Watts, “Cosmic Ray Flux and Neutron monitors suggest we may not have hit solar minimum yet”, wattsupwiththat.com, 15 March 2009 [http://wattsupwiththat.com/2009/03/15/cosmic-ray-flux-and-neutron-monitors-suggest-we-may-not-have-hit-solar-minimum-yet/#more-6208]. For anyone interested in charting the neutron flux data for themselves, the data can be obtained from the website of the University of Delaware Bartol Research Institute Neutron Monitor Program [http://neutronm.bartol.udel.edu/main.html#stations].